Constant change in the software development landscape is nothing new, and it did not begin with generative AI going mainstream.

The same holds for web development. Consider the browser wars, the new frontier the web ecosystem faced after the dot-com bust of the early 2000s, the steady advances in hardware, and the expansion of the web to mobile in the late 2000s (and now to basically everywhere). In this landscape, we can even argue that change is the only constant.

We could even argue that this field was among the first to try adopting AI at any pace, setting the ‘AI will rule the world’ scare aside to test things out. We have plenty of jokes on the subject, ranging from “we’ve been waiting to be automated since the early 2000s” to “finally, robots will get a sneak peek of our burnout phases”.

Although yet another article about Large Language Models (LLMs) can feel numbing, we would like to offer a cold analysis of the current state of ‘AI’ and its near future. In short, it sits at the opposite end of the spectrum from what the tech headlines would have you believe.

All the data, our personal usage, and our findings point towards augmentation. Replacement is nowhere in sight.

According to Stack Overflow’s 2024 Developer Survey, 76% of developers are already using or planning to use AI tools. The interesting part comes right after: many of the developers who use these tools don’t necessarily trust them.

This paradox captures the state of AI in software development: powerful enough to be indispensable, yet not reliable enough to be left unsupervised.

But before diving into the findings, let’s take a step back and analyse the tangible impact LLMs are having on software development.

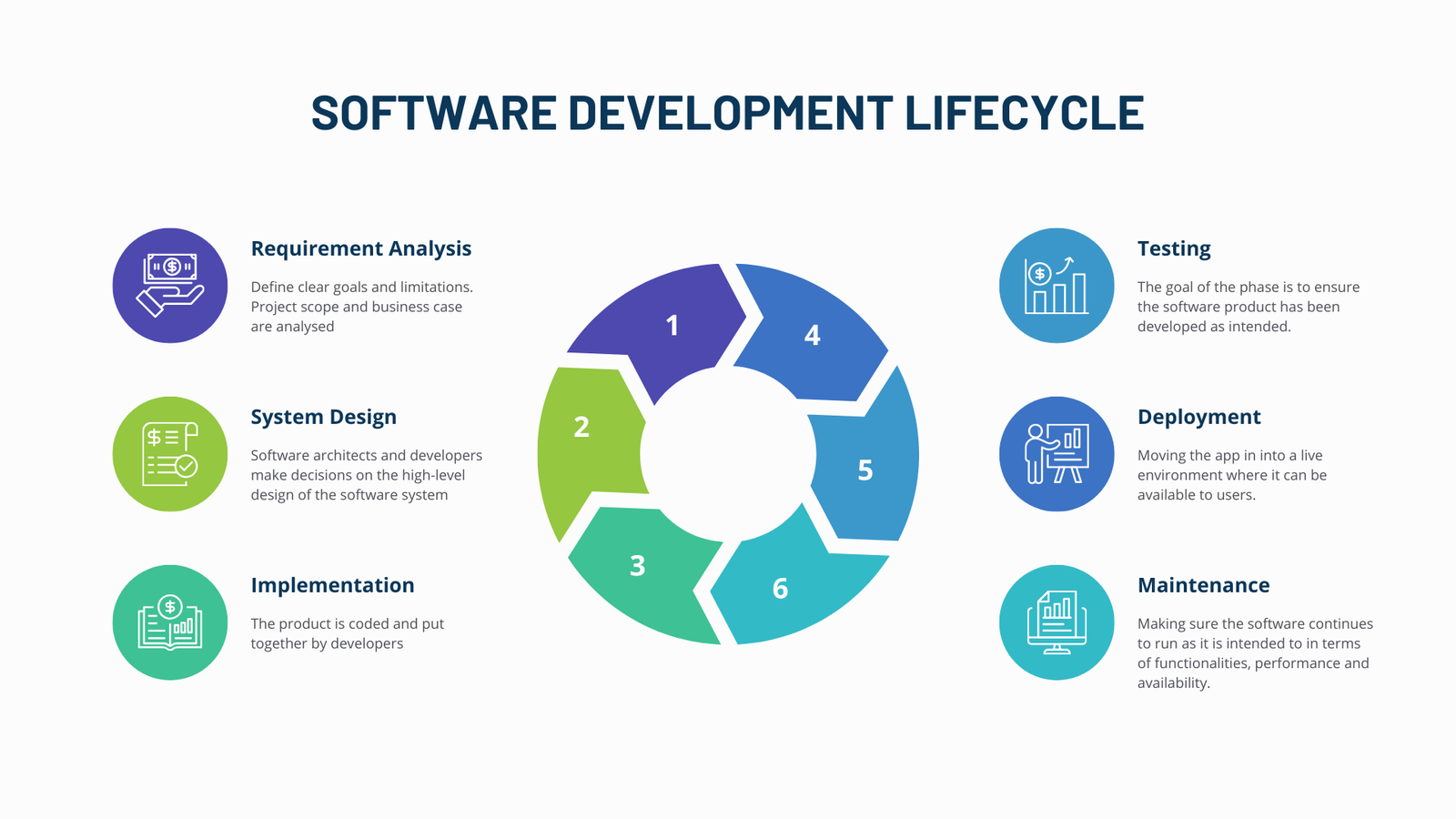

Software Development Lifecycle

Without going into too much detail, we will assume everyone is up to speed with the Software Development Life Cycle (SDLC). In the past, these cycles were more static: the entire cycle would usually run only once for a piece of software. Nowadays, cycles are completed within much shorter periods. On my recent project, the entire SDLC ran within a two-week iteration, and we even considered automating it further so that each major run would take only a couple of days.

That is a discussion for another time, and trends might shift again depending on the context. We may even see a return to a single cycle for building an entire piece of software.

The Real Impact Findings

Overall, we are seeing subtler changes than the headlines suggest, affecting each phase of the life cycle:

Analysis and Planning: Enhanced

Requirements gathering is becoming predictive rather than purely reactive. This is where an LLM’s creativity might come in handy: so much existing software is ‘similar enough’ that the patterns in the training data can yield good predictions.

However, where AI could truly shine is in analysing a project’s historical data. On a fresh project, with no history to analyse, it might not be much help.

AI certainly excels at pattern recognition, but from our personal experience, and from the survey, we can extrapolate that it struggles with complex tasks. The human work of eliciting requirements is nowhere near being automated, at least not yet.

Organizations are finding that AI serves better as a validation tool in this step.

Design Phase: Architects, Beware! But Not Yet.

Architecture documentation and system blueprinting are seeing genuine acceleration.

AI’s ability to generate consistent design artifacts is proving valuable. Add to this its ability to draft diagrams and relational patterns for database structures, and we can clearly see a winner in this phase.

However, the data shows some limitations: 63% of developers report that AI tools lack the context of their organization. This could be improved by companies tailoring an LLM to their own needs, or even hosting and training their own open-source or closed model. But for what is achievable today, this remains a clear limitation.

Development: Reshaped but (Unfortunately) Not Revolutionized

The speed boost isn’t coming from AI writing code. As the codebase grows, or the requirements become more demanding, code generation hits the same limitation as the design phase: missing context. Whether because the model lacks that context or because teams avoid feeding it sensitive data, writing the code can feel very fast while the mundane work takes longer: reviews take more time, and more bugs resurface.

Where we do see positive effects instead is in rapid prototyping, boilerplate reduction, and pattern recognition.

However, 66% of developers still distrust AI outputs enough to require thorough verification.

Testing: Evolving Towards Prediction

AI systems are identifying potential failure points before they manifest. This is a clear winner.

However, this comes with a caveat: 45% of developers say AI tools are bad or very bad at handling complex tasks, and tricky edge cases fall into that category.

Not to mention that a human still needs to be in the loop, which can increase costs more than AI lowers them. Today there is talk of fully autonomous testing, where an AI verifies the live environment by taking screenshots and evaluating various aspects of the app. But even if the technology is closer than ever, its cost is still astronomical compared to a regular tester who can do the same work quickly.
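To make the edge-case caveat concrete, here is a hypothetical Python illustration of our own (not taken from the survey): splitting an amount in cents into equal shares. A happy-path version such as `split_naive` looks correct for round numbers but silently drops cents when the amount does not divide evenly. Remainders and invalid share counts are exactly the kind of cases a human reviewer still has to think of and test for. Both function names are made up for this sketch.

```python
def split_naive(total_cents: int, parts: int) -> list[int]:
    # Happy-path version: fine for 900 / 3, but silently loses the remainder for 1000 / 3.
    return [total_cents // parts] * parts


def split_evenly(total_cents: int, parts: int) -> list[int]:
    """Split an amount in cents into `parts` shares that always add up to the total."""
    if parts <= 0:
        raise ValueError("parts must be a positive integer")
    base, remainder = divmod(total_cents, parts)
    # The first `remainder` shares get one extra cent, so nothing is lost.
    return [base + 1 if i < remainder else base for i in range(parts)]


if __name__ == "__main__":
    print(split_naive(1000, 3))   # [333, 333, 333], which sums to 999
    print(split_evenly(1000, 3))  # [334, 333, 333], which sums to 1000
```

The fix is trivial once someone spots the remainder; the hard part, for humans and LLMs alike, is spotting it in the first place.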

Deployment and Documentation: The Unexpected Winners

While code generation brings more headaches than benefits, the real transformation is happening in automation and maintenance.

Documentation, traditionally a pain point, is being transformed through automated updates and maintenance. It is one of the least complex tasks: the LLM does not need to speculate or fill information gaps, because the code is all there. Unsurprisingly, the output here is great.

The irony? The least glamorous parts of development are seeing the most practical benefit, and that is something every programmer can appreciate.
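To illustrate why documentation is such a good fit, here is a minimal Python sketch, assuming a Python codebase. It uses the standard `ast` module to find functions without docstrings and collect the facts a documentation generator would need. `undocumented_functions` and `build_doc_prompt` are hypothetical helper names, the prompt wording is an assumption, and the actual LLM call is deliberately left out.

```python
import ast


def undocumented_functions(source: str) -> list[dict]:
    """Collect name, parameters, and return annotation of every function lacking a docstring."""
    tree = ast.parse(source)
    found = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and ast.get_docstring(node) is None:
            found.append({
                "name": node.name,
                "params": [arg.arg for arg in node.args.args],
                "returns": ast.unparse(node.returns) if node.returns else None,
                "lineno": node.lineno,
            })
    return found


def build_doc_prompt(entry: dict) -> str:
    # Hypothetical prompt format: everything in it comes straight from the code itself.
    params = ", ".join(entry["params"]) or "no parameters"
    returns = entry["returns"] or "an unannotated value"
    return (f"Write a docstring for `{entry['name']}` (line {entry['lineno']}), "
            f"taking {params} and returning {returns}.")


SAMPLE = '''
def add(a: int, b: int) -> int:
    return a + b

def greet(name):
    """Return a greeting."""
    return f"Hello, {name}"
'''

if __name__ == "__main__":
    for entry in undocumented_functions(SAMPLE):
        print(build_doc_prompt(entry))
```

Nothing in the prompt has to be guessed: names, parameters, and types are read directly from the source, which is why this task suits LLMs so well. (`ast.unparse` requires Python 3.9 or later.)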

Security: Both Enhanced and Compromised

AI tools are getting better at identifying common vulnerabilities. This is a huge winner.

Yet, they’re also introducing new concerns about code reliability and privacy.

The data suggests we are trading traditional security challenges for new ones. LLMs are trained on large amounts of public GitHub code, and much of that code is known to be unsafe. According to one estimate, 33% of Copilot-generated code contains security flaws.

When you train an LLM on insecure code, the output comes as no surprise, and neither does the fact that more and more basic security flaws appear in almost every piece of software that ships. Admittedly, this is a clickbaity extrapolation on my part.
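For readers who have not met one, here is what a ‘basic security flaw’ typically looks like. This is a hypothetical Python illustration of our own, using the standard `sqlite3` module and an in-memory database; it is not code taken from Copilot or from the study behind the 33% figure. The unsafe function builds SQL by string interpolation, a classic pattern in public code, while the safe one uses a parameterized query. The table and function names are made up for this sketch.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Vulnerable: user input is pasted straight into the SQL string (SQL injection, CWE-89).
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Safe: the driver binds the value as a parameter, so it is never parsed as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "nobody' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # returns every row in the table
    print(find_user_safe(conn, payload))    # returns []
```

Both versions work identically on ordinary input, which is exactly why this kind of flaw slips through a quick review of generated code.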

Overall, LLMs seem to be here to stay: useful at times, annoying at others. In other words, really similar to a human being (joke).

Survey Conclusions

Almost one in four devs has no plans to use AI coding tools this year, whether because the tools aren’t available to them, because they don’t see a use case in their current work, because they lack trust in AI coding tools, or for some other reason.

Benefits developers see in AI tools:

- Increasing productivity (81%)

- Speeding up learning (62%)

- Improving efficiency (58%)

- Improving coding accuracy (30%)

- Expecting AI to become more integrated into documenting, testing, and writing code in the coming years

Concerns:

- 45% of developers say AI tools are bad or very bad at handling complex tasks

- 66% cite distrust in the output

- 63% say the tools lack the context needed to understand their organization’s codebase and architecture

- Waiting for AI answers disrupts workflow (53%), and a lot of time is spent looking for answers (60%)

Methodology

65,000 responses to the Stack Overflow 2024 Developer Survey.

References

Use of Artificial Intelligence in Software Development Life Cycle: A State of the Art Review (2015)
