It’s hardly surprising that the intense fight to become the AI ‘top dog’ shows in the rapid pace of development set by the ongoing machine learning competition among major tech companies. That pace tends to overshadow the various security risks that hide beneath the surface.

Here we are now, in an ever more demanding, fast-paced world, with a race to advance machine intelligence that is starting to eclipse the moon race. Pun intended.

A more technical analysis of this topic is available from NSFocusGlobal.

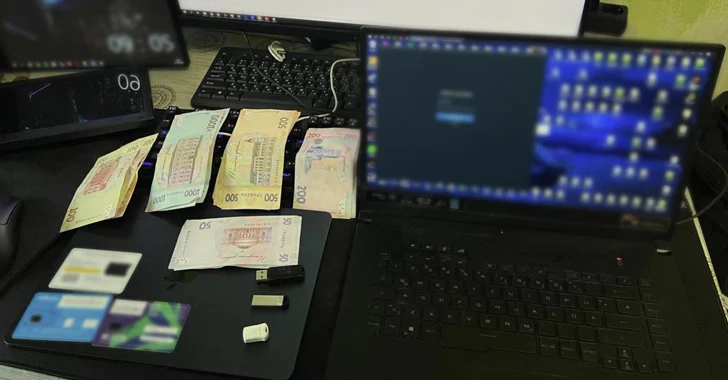

The major risk that a malware-infected machine learning model poses is that it exposes a vulnerability even experts might overlook: an attacker can gain control over entire machine learning repositories, acquiring their models and data, without any complex security breach.

Machine learning environments, which more often than not have access to sensitive data, are viewed as the new gold rush by cyber attackers because of their lack of robust security measures.

Same Security Risks As In The Past, Now Targeting Machine Learning Models

None of this is really new: software developers and security experts have encountered similar breaches in Node package repositories and Python library repositories. In software development we have a saying, “code is code”, and funnily enough, the same holds for ML models. They are a black box, but underneath they are essentially code, and as such they can be manipulated in various ways. Depending on the environment and programming language, attackers can steal data or even hijack machines.
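To make the “code is code” point concrete, here is a minimal and deliberately harmless sketch in Python. It assumes a model shipped in Python's `pickle` format, which is my choice for illustration and not something the analysis above names specifically; `FakeModel` and `list_imports` are hypothetical names invented for this example. Loading the bytes calls a function chosen by whoever built the file, and the helper shows one rough way to list the referenced callables without loading anything.

```python
import pickle
import pickletools


class FakeModel:
    """Hypothetical stand-in for a serialized model (illustration only)."""

    def __reduce__(self):
        # pickle stores this (callable, args) pair and calls it at load time.
        # A harmless print is used here; a malicious file could name any callable.
        return (print, ("this ran while the 'model' was loading",))


def list_imports(data: bytes) -> list:
    """Heuristically list (module, name) pairs a pickle references, without loading it."""
    found = []
    strings = []  # string arguments seen so far, used to resolve STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols carry "module name" directly in the opcode argument.
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
            # Newer protocols push module and name as the two preceding strings.
            found.append((strings[-2], strings[-1]))
        elif isinstance(arg, str):
            strings.append(arg)
    return found


payload = pickle.dumps(FakeModel())

# Inspect the bytes first: nothing is executed by this call.
print(list_imports(payload))

# Loading the bytes is what triggers the stored call.
result = pickle.loads(payload)
```

The scan is only a heuristic and can be evaded by a determined attacker, so treat it as a sketch of the idea rather than a real malware scanner.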

The main difference from software development is that ML repositories simply do not enforce as many cybersecurity defenses (routine checks, anti-malware scans, cryptographic signatures, etc.). I don’t really understand why and can’t give an answer here; my assumption is that the need for a high pace of development in the field led to security concerns being ignored altogether.
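As one hedged illustration of what such a check could look like, the Python sketch below pins a SHA-256 digest for a model file and rejects the file if the digest no longer matches. This is a plain checksum comparison, not a full cryptographic signature scheme, and the helper names and the demo file are hypothetical; in practice the pinned digest would have to come from a channel you trust.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: Path, expected_hex: str) -> bool:
    """True only if the file's digest equals the pinned digest."""
    return hmac.compare_digest(sha256_of(path), expected_hex.lower())


# Demo with a throwaway file standing in for downloaded model weights.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"  # hypothetical file name
    model_path.write_bytes(b"pretend these are model weights")
    pinned = sha256_of(model_path)  # normally published by a trusted source

    ok_before = verify_model_file(model_path, pinned)

    # Simulate tampering after the digest was pinned.
    model_path.write_bytes(b"tampered weights")
    ok_after = verify_model_file(model_path, pinned)

print(ok_before, ok_after)
```

A check like this only tells you the file is the one that was published. It says nothing about whether the published file was safe in the first place.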

Photo by Star Zhang.
