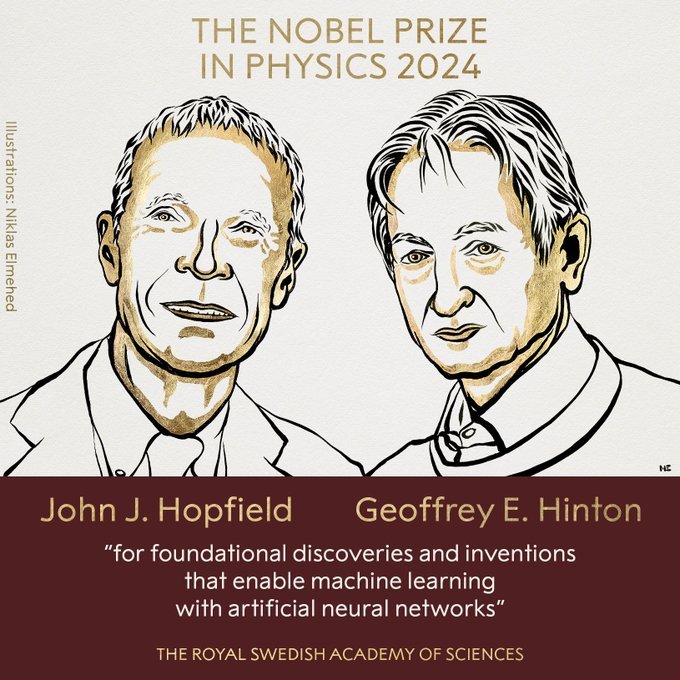

On Tuesday, 8 October, the 2024 Nobel Prize in Physics was awarded to John J. Hopfield and Geoffrey E. Hinton for their fundamental work in artificial neural networks and machine learning. The decision has also sparked controversy in the scientific community, raising questions about the boundaries between physics and computer science.

As someone who has worked in web development for over a decade, I’ve witnessed firsthand how machine learning and AI have transformed our field. The roots of these technologies, as it turns out, trace back to the work of Hopfield and Hinton in the 1980s.

Hopfield’s 1982 Paper: Neural Networks and Physical Systems

John Hopfield’s seminal 1982 paper, “Neural networks and physical systems with emergent collective computational abilities,” published in the Proceedings of the National Academy of Sciences, was a cornerstone in the development of modern neural networks.

Key aspects of this work include:

- Introduction of the Hopfield network model

- Application of concepts from statistical physics to neural systems

- Description of neural activity in terms of energy states

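Hopfield’s model demonstrated how a network of simple units could exhibit complex collective behavior: stored patterns sit at low-energy states, and the network can settle into one of them when started from a partial or noisy version.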
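To make the energy picture concrete, here is a minimal sketch of a Hopfield-style network in plain Python. It is an illustration under my own assumptions, not code from the 1982 paper: the function names (`train_hebbian`, `energy`, `recall`), the +1/-1 unit encoding, and the toy eight-unit pattern are all chosen for the example.

```python
def train_hebbian(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (Hebbian rule)."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def energy(w, s):
    """Energy of state s: lower values mean a more 'settled' network."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def recall(w, state, max_sweeps=10):
    """Update units one at a time until nothing changes (or sweeps run out)."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(w[i][j] * s[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:
            break
    return s


# Toy example: store one pattern, then start from a copy with one unit flipped.
stored = [1, -1, 1, -1, 1, -1, 1, -1]
noisy = [-1, -1, 1, -1, 1, -1, 1, -1]

weights = train_hebbian([stored])
recovered = recall(weights, noisy)

print("recovered == stored:", recovered == stored)
print("energy before:", energy(weights, noisy))
print("energy after: ", energy(weights, recovered))
```

In this toy case the corrupted input settles back into the stored pattern, and the energy after recall is lower than before. That is the sense in which memories behave like low-energy states.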

Hinton’s Boltzmann Machine

Geoffrey Hinton, along with Terry Sejnowski, introduced the Boltzmann machine in their 1983 paper “Optimal Perceptual Inference,” marking another paradigm shift in artificial intelligence research.

We might argue that the 1980s were the most important years in computer science since its inception.

The Boltzmann machine imagined neural networks as dynamic systems, drawing inspiration from physics. In this model, neurons could be active or dormant, influencing each other through connections. The overall state was governed by an energy function, mirroring the behavior of particles in physical systems.

Hinton and Sejnowski’s key innovation was the introduction of hidden units. These elements were not directly observable but were instrumental in shaping the network’s behavior, and they became precursors to the deep, multi-layered architectures that drive today’s AI systems.
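The ideas in this section (units that are active or dormant, an energy function over the whole state, and hidden units) can be sketched in a few lines of Python. This is a rough illustration under my own assumptions rather than the authors' formulation: the names `energy`, `on_probability`, and `gibbs_sweep`, the three-unit network, and the specific weights and biases are all hypothetical, and learning is left out entirely.

```python
import math
import random


def energy(w, b, s):
    """Energy of a 0/1 state s given symmetric weights w and biases b."""
    n = len(s)
    pair_term = sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    bias_term = sum(b[i] * s[i] for i in range(n))
    return -(pair_term + bias_term)


def on_probability(w, b, s, i, temperature=1.0):
    """Probability that unit i switches on, given the other units' states."""
    gap = b[i] + sum(w[i][j] * s[j] for j in range(len(s)) if j != i)
    return 1.0 / (1.0 + math.exp(-gap / temperature))


def gibbs_sweep(w, b, s, rng, temperature=1.0, clamped=()):
    """Resample every unit that is not clamped, one at a time, in place."""
    for i in range(len(s)):
        if i in clamped:
            continue
        s[i] = 1 if rng.random() < on_probability(w, b, s, i, temperature) else 0
    return s


# Hypothetical toy network: units 0 and 1 are visible, unit 2 is hidden.
# The hidden unit is connected to both visible units; the visibles are not
# connected to each other.
w = [
    [0.0, 0.0, 2.0],
    [0.0, 0.0, 2.0],
    [2.0, 2.0, 0.0],
]
b = [-1.0, -1.0, -1.0]

rng = random.Random(0)
state = [1, 1, 0]
# Clamp the visible units to the observed data and sample only the hidden unit.
for _ in range(5):
    gibbs_sweep(w, b, state, rng, clamped=(0, 1))

print("P(hidden on | both visibles on):", on_probability(w, b, [1, 1, 0], 2))
print("state after sampling:", state)
```

Unlike the deterministic update in a Hopfield network, each unit here switches on with a probability that depends on how much doing so would lower the energy. The hidden unit never appears in the data, yet its connections shape which visible configurations are likely.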

Controversy: Physics or Computer Science?

The decision to award a physics prize for work often associated with computer science has sparked debate.

Critics focus mostly on the category mismatch: the primary impact of this work has been in computer science and AI, not traditional physics. The decision might also blur the lines between scientific disciplines in the future.

As a web developer who has dabbled in machine learning, I can see both sides of this argument. The interdisciplinary nature of this work reflects fundamental advancements that can be related to work in physics, but overall I agree that there is a category mismatch.

The Legacy and Future Implications

Regardless of the controversy, the impact of Hopfield and Hinton’s work is undeniable.

Their foundational work has led to the development of deep learning algorithms that power much of today’s AI technology. And of course, advanced AI systems built on these foundations are now being used to make discoveries in various scientific fields, including astrophysics and theoretical physics.

Featured photo source: https://x.com/NobelPrize
